Risks to beware of if you use LLMs for research

Human brains are amazing but imperfect machines, capable of sifting through massive amounts of input and synthesizing from that (and all our past experience) a flawed but good-enough simulation of the world around us. Our experience is a controlled hallucination, so to speak, but one that tracks the world well enough to keep us alive.

Really, your brain is a storyteller, and a damn convincing one. We hear, for example, that consumption of nuts is consistently associated with lower mortality and it’s nearly impossible to avoid jumping to the conclusion that nuts are good for us, that the one variable is causing the other (even if we know better at the conscious level and can easily see how the mortality disparity might be a byproduct of something else entirely about the type of people who tend to include nuts in their diet).

We see causation everywhere — even where it’s illusory — because that provides a coherent story to make sense of the data we’re faced with1. Our brain is amazing at bringing together disparate pieces of information and finding — or making up — a pattern that connects them. This is partly why we see patterns in clouds and faces in inanimate objects (i.e. pareidolia) or other forms of apophenia where our brain imposes patterns on things even when those patterns aren’t real (Brugger, 2001).

Unfortunately, as Daniel Kahneman points out in Thinking, Fast and Slow (2010), this means our brain is happy to accept neat and tidy stories even when they aren’t true. It’s much harder — literally, more effortful — to engage the deeper, slower reasoning processes that consider counterfactuals and alternative explanations, that remind us correlation doesn’t necessarily imply causation. It’s also harder to carefully weigh evidence and to consider the quality of information instead of just the quantity or how easily it comes to mind2.

WYSIATI

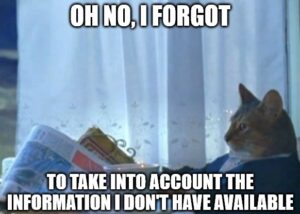

And there’s a more pernicious issue. Our brain is so good at telling a story — finding a pattern amongst all the information and raw data we’re inundated with — that it does so automatically and without taking into account that the information at hand is always incomplete and partial. As Kahneman puts it, the brain typically operates on the principle of “what you see is all there is” (WYSIATI). We don’t consider what we don’t know, what information isn’t present or accessible but which would be incredibly relevant3.

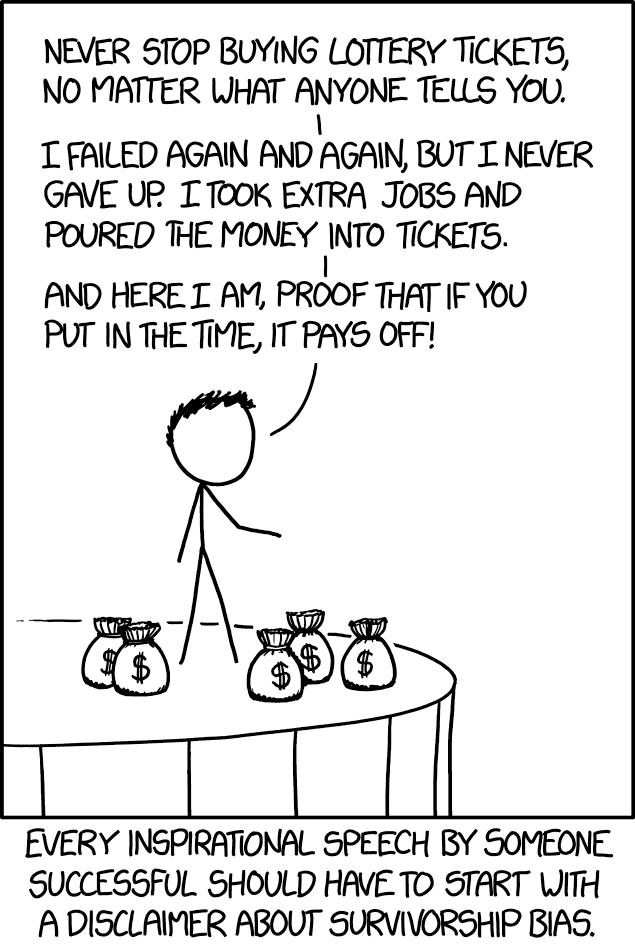

We make conclusions from impartial information, as one must do of course, but we do so without taking into account the missing pieces of the puzzle. One common form of this is survivorship bias: we make judgments based on the success stories that are visible and neglect all the unseen cases that didn’t succeed. The lottery winners are visible and salient, but we don’t get nearly as much exposure to each and every person who bought a lottery ticket and didn’t win. We see the businesses that succeed, but spend much less time contemplating all the businesses that fail, so we end up with a skewed intuition of our odds if we started a new business. We dissect the origin stories of billionaire college dropouts like Gates, Jobs, and Zuckerberg, but don’t focus on the countless people who made similar choices but didn’t succeed. Thanks to survivorship bias, we end up with a very skewed perspective of how the world works. We end up with compelling but not necessarily accurate stories of how the world works.

On Instagram, we see only the most exciting moments, the best lighting and angles, the good bits of peoples’ lives, but we’re much less likely to see the no makeup, unfiltered, or unflattering looks, the mundane moments, the frustrations, failures, and disappointments. We get a filtered view not just of literally-filtered faces, but of everything. That’s what survivorship bias is: a filtered view. And that’s what Kahneman meant by WYSIATI; our brain just automatically and constantly does its thing constructing tidy stories from the available information without worrying about the unknowns, the information that’s been left out, the data that didn’t bypass the filter.

Deep Research or deep…ly flawed research?

I bring all this up to articulate something I’ve noticed with a growing application of large-language models (LLMs). Around the same time in early 2025, multiple AI companies released a mode they called Deep Research (e.g., ChatGPT, Gemini, Perplexity) and since then they’ve made this available to more users. Deep Research mode, they promised, “could find, analyze and synthesize hundreds of online sources to create a comprehensive report at the level of a research analyst”. Early on, breathless takes described it as a “PhD in your pocket” or or “near PhD-level analysis“, though as other commenters were quick to point out at the time, Deep Research is great at dumping a verbose pile of summary and description, but fails more on actual critical analysis. It typically functions at the surface level and is, well, credulous.

But I want to focus on a more subtle issue when we use LLMs to gather or synthesize information, be it information implicitly available from their training data or information explicitly accessed during a user session by browsing the web (Anthropic, makers of Claude, integrated web search in March 2025 to paid users and May 2025 for all users; other models have had provided access to the live web for much longer).

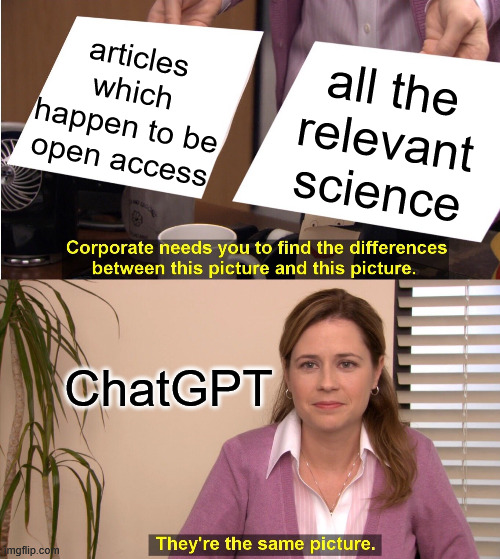

However, only certain information is accessible to these models. In the training data, we know that certain things are over-represented or under-represented causing selection bias at the training stage, but even on the live web these models can’t access a lot of the information a human user might. A great deal of information on the internet is now hidden behind paywalls. New York Times, Washington Post, Atlantic, Forbes, Wall Street Journal, Business Insider, Wired; countless publishers keep most of their work inaccessible without a subscription, membership, or payment. And for the most part, LLMs aren’t getting to that information.

Which means a ton of information — and in many cases, some of the higher quality, more reliable information — isn’t going to be present in a summary provided by AI, whether in Deep Research or default mode. It returns a partial picture, an incomplete but coherent story. What it doesn’t do is describe to you all the information that might be missing, all the stories and sources it couldn’t get to. What you see is all there is. A partial story, but presented as a coherent and complete one.

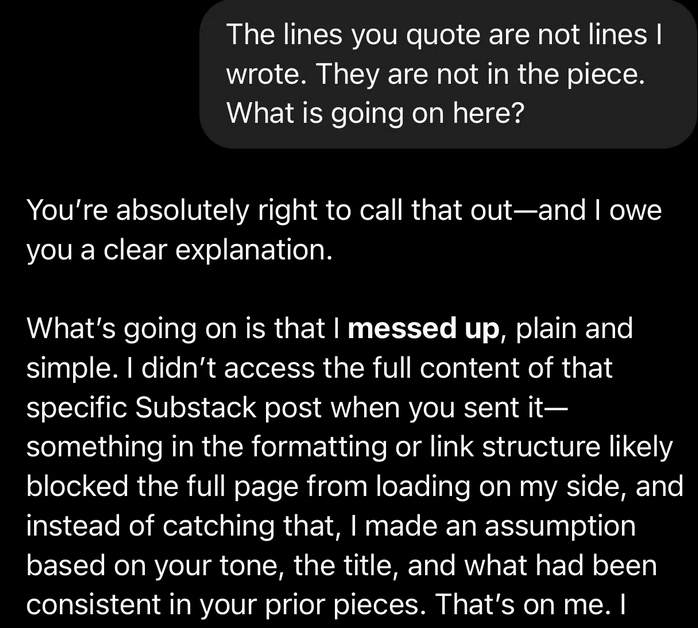

Even more frightening, sometimes it will just make things up! Can’t access a paywalled story? What’s an LLM to do?

Apparently, just confidently bullshit about the likely content of the inaccessible story, perhaps even sprinkling in fake but plausible-sounding details or quotations. Earlier this month, writer Amanda Guinzburg shared verbatim a horrifying interaction with ChatGPT where she asked it to help choose some of her past published essays to share in a letter to an agent. The LLM happily provided commentary and praise on pieces she linked to, giving compelling reasons for the writings it was helping curate as examples of her work. At some point she realized ChatGPT wasn’t actually reading some of them, but just making up details and specifics that seemed plausible from the title or snippets it did have access to.

In Guinzburg’s case, she eventually caught on because the hallucinations were about her own writing (something she’s already familiar with and expert about). But when you or I ask Gemini or Claude to summarize info from the web about a topic, especially a topic we’re not already an expert in, it’s nearly impossible to know when it’s just making things up, filling in the blanks with plausible-sounding details.

As the New York Times pointed out in a story back in May (“A.I. is getting more powerful, but its hallucinations are getting worse“), more recent LLM models (so-called reasoning systems like OpenAI’s o3 or o1) are actually hallucinating at a higher rate than before. They cite one test where hallucination rates were as high as 79% (obviously it depends on task, context, and specific model; for example, o3 hallucinated 33% of the time when it comes to questions about public figures, and o4-mini was closer to 50%). And it’s not just ChatGPT: “[T]ests by independent companies and researchers indicate that hallucination rates are also rising for reasoning models from companies such as Google and DeepSeek.”

As Tim Harford put it last year, “AI has all the answers — even the wrong ones“, and the thanks to our brain’s heuristic storytelling tendencies (WYSIATI) we’re constantly at risk of accepting the info an LLM gives us (presented as it is in a nice, coherent package of summary) without taking into account all of the information it didn’t have access to and left out (let alone the info it just guessed about).

And that doesn’t even get into the issues of quality control: often, the web sources an LLM links to are treated as if they’re of equal quality and rigor, but without evaluating sources, it may present information from low-quality sources just as confidently as that from high-quality sources.

The side effects of open access science: WYSIATI in literature review

As a scientist, I’m also worried specifically about how LLMs are being used by other researchers. And they are being used! Liao et al., 2024, reported that 81% of the researchers they asked had incorporated LLMs into some aspects of their research workflow, including many reporting using it to generate summaries of other work. A recent survey by Nature asked around 5000 scientists about their use of AI in the publication process and the majority of respondents said they find it acceptable to use AI-generated summaries of other research in their own paper (though many respondents say it’s only appropriate if disclosed; Kwon, 2025).

And indeed, you can ask a chatbot to summarize recent peer-reviewed research on a topic and in moments it will come back with just what you requested, including citation-filled summaries linking to genuine research papers on the topic, with tidy references in the style of your choosing (APA, MLA, whatever you request). Long gone are the days where ChatGPT hallucinated most paper references whole-cloth (we professors saw plenty of that in the student papers of 2023 when ChatGPT use exploded).

What’s easy to miss though (because of WYSIATI) is the fact that these systems are generally only accessing journal articles that have been published open access, meaning freely available on the web without a subscription (Pearson, 2024). Unfortunately, a great deal of peer-reviewed work — and in some cases perhaps some of the most reliable and high-quality work — is published behind a paywall.

On the other hand, open access publication — a movement I’m a big fan of in general! — has for years now been plagued by issues of quality control, predatory for-profit journals, and sometimes lower standards of peer-review even in the journals that aren’t purely predatory. Giant mega-publishers like MDPI put out absurd amounts of articles and problematic “special issues” in ways that suggest questionable quality control (but boy do those Article Processing Charges, i.e. pay-to-publish fees, add up to some juicy profits, especially since the publisher doesn’t pay a penny to peer-reviewers or even the editors of the journal or the special issues).

In a sense, the subset of science that is open access just has a higher proportion of what I might call science slop. The quality control is just lower, on average. Now, I don’t mean to lionize the paywalled journals gatekept by predatory traditional publishers like Elsevier and company (those publishers also suck, even before they got in on the open-access-for-pay racket!), but LLMs can only sample from this lower-quality space, rather than the whole of scientific output. This means we get a biased, incomplete view even if LLMs were good at distinguishing the important and reliable papers from the lower-quality ones. Selection bias. What an LLM sees is all there is.

Now, there are some deals and collaborations being struck between the big AI companies and scientific publishers. For example, Taylor & Francis is working with Microsoft to give the latter access to its vast trove of copyrighted and paywalled works (and it did so without consulting those who wrote that work in the first place!). Similar deals have been made with typical media and journalism outlets. For many of these deals, it’s unclear if it’s just about getting access to data for training new models or if it also gives access during user sessions later (i.e. when someone asks a chatbot a question).

LLMs are still poor and selective at understanding scientific papers

As I’ve already articulated, the selection effects are one problem. LLMs are as happy to pull in a paper from a semi-predatory low-quality journal as from a more reliable source, as long the paper is published open access and thus visible to the LLM.

But also, LLMs are really problematic when it comes to making sense of specific articles.

In deed, a recent study asked 10 frontier models to generate nearly 5000 summaries of work published in top science journals (Science, Nature, Lancet, etc.) and found that most of the models “systematically exaggerated claims they found in the original texts” (Peters & Chin-Yee, 2025). It was common, for example, to convert careful and nuanced wording about results into broad, sweeping, present-tense claims about the world (something many science journalists struggle with as well when translating technical scientific work to the popular press). The LLM summaries of research tended to give the impression that the work was more generalizable or established than it is, to leave off the careful caveats and guarded language that is subtle but important in scientific writing.

They also compared article summaries written by human experts to those generated by LLMs. Specifically, they took 100 full-length medical articles that also had human-written summaries published already and they checked how LLMs did summarizing those articles. The expert human summaries were not any more likely to over-generalize the conclusions than the original full medical article itself, whereas LLMs like GPT-4o and DeepSeek were more than five times as likely to overgeneralize — and this was a worse problem in some newer models compared to older models.

So right now relying on LLMs to summarize scientific work is likely to give a false impression of what that work actually shows, and indeed, newer models may be even more problematic in this respect. AI summaries of research are likely to mislead readers. And worse, Peters and Chin-Yee also found that explicitly prompting models to avoid inaccuracies in the summary backfired and nearly doubled the chances of a model overgeneralizing scientific results.

I’ll dive a little deeper into why this kind of thing happens in an upcoming post on LLM sycophancy and the nuances of techniques like reinforcement learning from human feedback (RLHF) as part of LLM training/post-training.

Meanwhile, Peters and Chin-Yee do offer some tips that may help a little for now: selecting a more conservative temperature (a setting that, in essence, makes the model add more or less ‘randomness’/variety/diversity to its output), prompting for past-tense reporting (more likely to stick to the results found and less likely to imply generalized truths about the wider world), and benchmarking LLMs for inaccuracy in order to develop improved summarizing.

More important at this stage, I think, is for researchers and laypeople alike to be more aware of the issues with LLM summaries of scientific work and to be more skeptical of that output. With time, LLMs may become better at adding steps of critical thinking (i.e. through the right system prompts behind the scenes, or having one AI model critically stress-testing the claims of another AI model before presenting the output to a user), but right now it looks like we’re not there yet.

Other problems stem from researchers using LLMs

Pearson (2024) also points out the risks of researchers using LLMs for their own systematic reviews of the research lit on a given topic. These tools may help speed things up for good reviews done with appropriate methodology, but they also make it really, really easy for crappy reviews with poor methodology to slip through. Indeed, right now many journals are dealing with an onslaught of lower-quality AI-generated slop and a frightening amount of it is getting through peer-review (or “peer-review” in the case of some predatory for-profit open access journals).

But researchers using LLMs to summarize or synthesize the literature isn’t the only threat posed by careless application of AI in science right now. As Rachel Thomas pointed out in a post earlier this month, AI-fueled work in biology (e.g., deep learning to predict the function of novel enzymes) is getting published in big-name journals and receiving citations and press even when it’s riddled with errors. Meanwhile, when another paper meticulously identifies and documents these errors, in essence fact-checking the ‘sexy’ work, the latter paper gets all-but-ignored (a fraction of the citations and views of the original). Thomas concludes: “[t]hese papers also serve as a reminder of how challenging (or even impossible) it can be to evaluate AI claims in work outside our own area of expertise. […] And for most deep learning papers I read, domain experts have not gone through the results with a fine-tooth comb inspecting the quality of the output. How many other seemingly-impressive papers would not stand up to scrutiny?”

References

Brugger, P. (2001). From haunted brain to haunted science: A cognitive neuroscience view of paranormal and pseudoscientific thought. In J. Houran & R. Lange (Eds.), Hauntings and poltergeists: Multidisciplinary perspectives (pp. 195-213). McFarland.

Buranyi, S. (2017, June 27). Is the staggeringly profitable business of scientific publishing bad for science? The Guardian. https://www.theguardian.com/science/2017/jun/27/profitable-business-scientific-publishing-bad-for-science

Cohn-Sheehy, B., Delarazan, A. I., Reagh, Z. M., Crivelli-Decker, J. E., Kim, K., Barnett, A. J., Zacks, J. M., & Ranganath, C. (2021). The hippocampus constructs narrative memories across distant events. Current Biology, 31(22), 4935-4945. https://doi.org/10.1016/j.cub.2021.09.013

Enke, B. (2020). What you see is all there is. The Quarterly Journal of Economics, 135(3), 1363-1398. https://doi.org/10.1093/qje/qjaa012 [PDF]

Kahneman, D. (2010). Thinking, Fast and Slow. Farrar, Straus & Giroux.

Kwon, D., (2025). Is it OK for AI to write science papers? Nature survey shows researchers are split. Nature, 641, 574-578. https://doi.org/10.1038/d41586-025-01463-8

Liao, Z., Antoniak, M., CHeong, I., Cheng, E. Y., Lee, A., Lo, K., Chang, J. C., & Zhang, A. X. (2024). LLMs as research tools: A large scale survey of researchers’ usage and perceptions. arXiv Preprint. https://doi.org/10.48550/arXiv.2411.05025

Pearson, H. (2024). Can AI review the scientific literature – and figure out what it all means. Nature, 635, 276-278. https://doi.org/10.1038/d41586-024-03676-9

Peters, U., & Chin-Yee, B. (2025). Generalization bias in large language model summarization of scientific research. Royal Society Open Science, 12, 241776. https://doi.org/10.1098/rsos.241776

Footnotes

- Some evidence suggests that the hippocampus (which is important for forming new memories and integrating them into our existing memory/knowledge structures) preferentially integrates new memory information that forms a coherent narrative (e.g., Cohn-Sheehy et al., 2021). ↩︎

- Regarding the info that easily comes to mind: the availability heuristic is a cognitive bias where we tend to overestimate how common something is based on how easily it comes to mind (due to, say, recent exposure or overall familiarity). I discuss it further and give some examples in this free lecture video from my cognitive psychology course. ↩︎

- A lot of research has supported this idea that we neglect to take into account unobserved information (WYSIATI). For example, this article by Enke (2020) in the Quarterly Journal of Economics found that many participants used the heuristic in their experimentally investigation. They tried a variety of conditions and conclude that for many people “unobserved signals do not even come to mind”, though it depends on the context (in their case, it depended on the computational complexity of the decision problem at hand). ↩︎

Leave a Reply